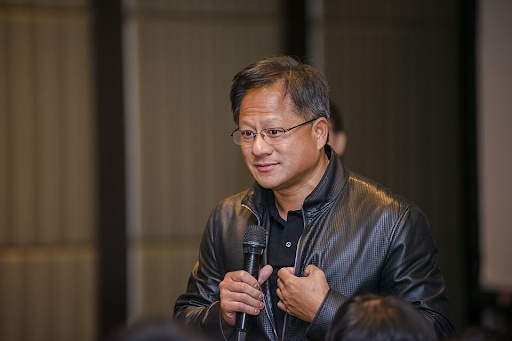

In the high-stakes world of AI infrastructure, scale is everything. Nvidia CEO Jensen Huang took this concept to the extreme at CES by unveiling a new architecture for the company’s upcoming Vera Rubin chips. Instead of just selling individual processors, Nvidia is pitching “pods”—massive clusters of over 1,000 chips designed to work as a single supercomputing unit.

This new architecture is built to handle the explosion in demand for AI processing. The Vera Rubin platform features a flagship server containing 72 graphics units and 36 central processors. When these servers are connected into pods, the available computing power grows with the number of chips in the cluster. Huang stated that these systems could improve the efficiency of generating AI “tokens” by ten times, addressing a critical bottleneck in the industry.
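To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above (72 graphics units and 36 central processors per server, pods of over 1,000 chips, a tenfold token-efficiency gain). Everything else is an assumption made for illustration: Nvidia has not said here whether the 1,000-chip figure counts central processors, and the function names and the baseline token figure are hypothetical.

```python
# Figures quoted in the article.
GPUS_PER_SERVER = 72
CPUS_PER_SERVER = 36
POD_CHIP_TARGET = 1000  # "over 1,000 chips"; the exact pod size is not given


def servers_for_pod(chip_target: int, count_cpus: bool = True) -> int:
    """Smallest number of flagship servers whose chips exceed chip_target.

    Assumption: whether a pod's chip count includes CPUs is not stated,
    so both readings are supported via count_cpus.
    """
    chips_per_server = GPUS_PER_SERVER + (CPUS_PER_SERVER if count_cpus else 0)
    # Floor division plus one guarantees strictly more than chip_target.
    return chip_target // chips_per_server + 1


def tokens_after_gain(baseline_tokens: float, efficiency_gain: float = 10) -> float:
    """Tokens produced for the same resources after the claimed gain.

    Assumption: "ten times more efficient" is read as ten times as many
    tokens for the same resource budget.
    """
    return baseline_tokens * efficiency_gain


if __name__ == "__main__":
    print(servers_for_pod(POD_CHIP_TARGET))                    # counting GPUs and CPUs
    print(servers_for_pod(POD_CHIP_TARGET, count_cpus=False))  # counting GPUs only
    print(tokens_after_gain(1_000_000))                        # hypothetical baseline
```

Under these assumptions, a pod would need roughly 10 to 14 flagship servers to pass the 1,000-chip mark, depending on which chips are counted.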

The “pods” are not just about raw speed; they are about enabling new types of AI. The complex “reasoning” required for Nvidia’s new Alpamayo automotive software demands low-latency, high-throughput computing. These pods provide the infrastructure necessary to train these advanced models and serve them to millions of users simultaneously.

This announcement comes as Nvidia faces increasing competition from tech giants like Google and chipmakers like AMD. By shifting the contest from individual chips to “pods” and massive clusters, Nvidia is leveraging its engineering lead to create systems that are difficult for competitors to replicate.

The Vera Rubin chips are set to arrive later this year, and their impact will be felt across the tech landscape. From powering the chatbots on our phones to training the brains of self-driving cars, Nvidia’s new supercomputing pods are destined to become the engine room of the digital economy.

Nvidia Redefines Supercomputing with New Chip “Pods”
